In the last few posts, we talked about how to use the Llama-2 model for different NLP tasks, and in most cases I used a GPU in Kaggle kernels. But there can be situations where you don’t have a GPU and need to build apps using a CPU only. In this short…

Tag: LLM

Generative AI: LLMs: Reduce Hallucinations with Retrieval-Augmented-Generation (RAG) 1.8

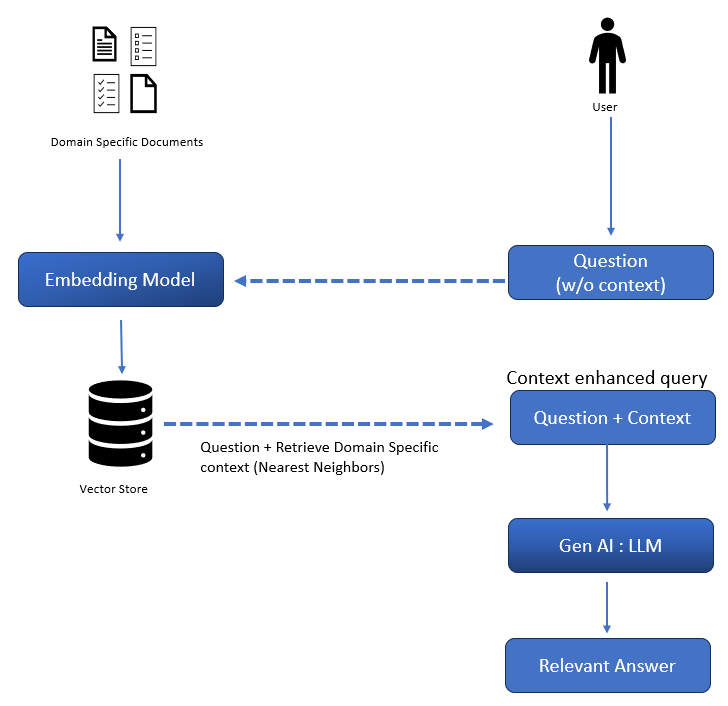

Though there is huge hype and excitement around LLMs, since they are really good at several NLP tasks, they also come with a few issues: Frozen in time – LLMs are “frozen in time” and lack up-to-date information. This is because these LLMs are trained with…

Generative AI: LLMs: Semantic Search and Conversation Retrieval QA using Vector Store and LangChain 1.7

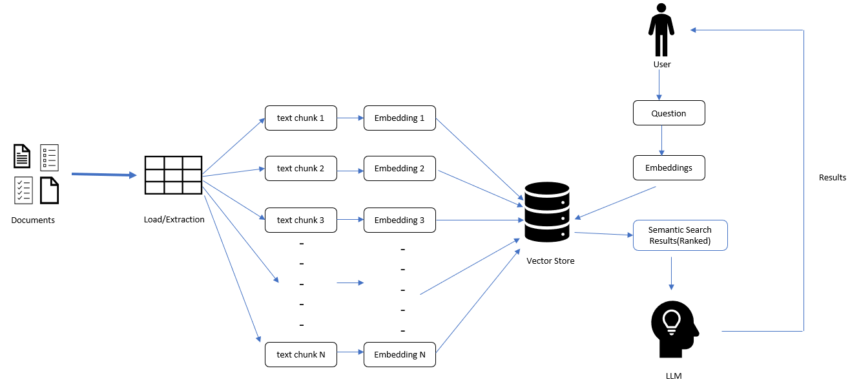

In the last few blog posts, we went through the basics of LLMs, different fine-tuning approaches, and the basics of LangChain. In this post we will mainly work with LLM embeddings: how to store them in a vector store, and how to use this persistent vector DB for semantic search…

Generative AI: LLMs: LangChain + Llama-2-chat on Amazon mobile review dataset 1.6

In the last post we talked in detail about how to fine-tune a pretrained Llama-2 model using QLoRA. Llama-2 comes in two sets of models: the pretrained model used in the previous blog post, and the instruction-fine-tuned Llama-2 chat model, which we will use in this post…

Generative AI: LLMs: Finetuning Llama2 with QLoRA on custom dataset 1.5

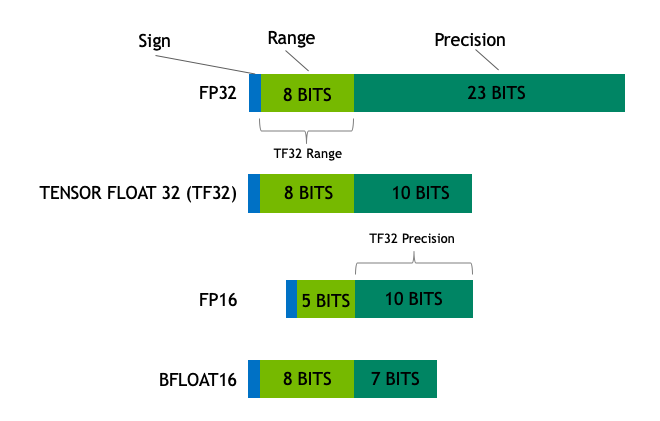

In the last post in this series, we went through the inner workings of the LoRA fine-tuning process. In this blog post we will combine the concepts of LoRA with quantization. We will use the newly launched Llama2, which is one of the biggest LLM launches in the history of open-source models. Below…

Generative AI: LLMs: LoRA fine tuning 1.4

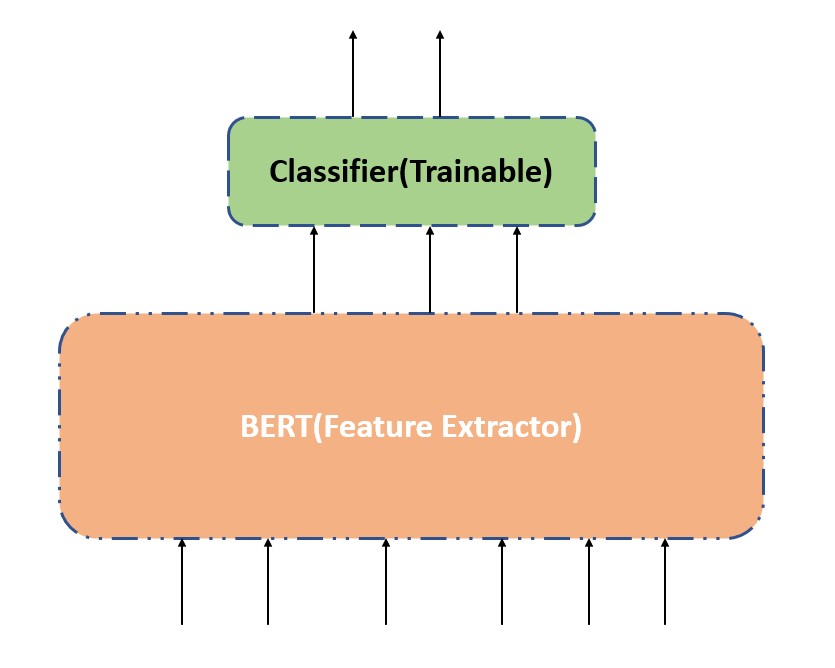

In the last post we discussed two approaches to fine-tuning using the feature-based method. These options may not always be efficient in terms of computational complexity or time complexity. Full fine-tuning of any LLM needs to stitch the steps mentioned below together: The combination of all these steps can produce a lot of…

Generative AI: LLMs: Feature base finetuning 1.3

In the last post we talked about how to do in-context fine-tuning using few-shot techniques. In-context fine-tuning works when we don’t have much data or don’t have access to the full model. This technique has certain limitations: for example, the more examples you add to the prompt, the more the context length increases, and…

Generative AI: LLMs: Finetuning Approaches 1.1

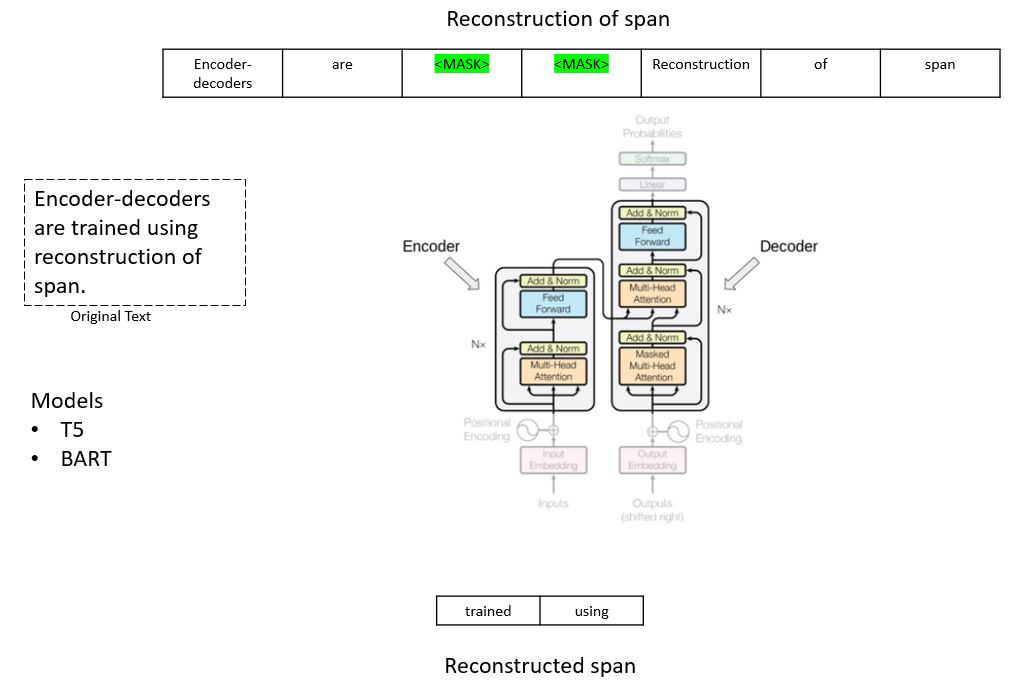

In the last post in this Generative AI with LLMs series, we talked about the different types of LLMs and how they are generally pre-trained. These deep learning language models with large numbers of parameters are generally trained on open-sourced data such as Common Crawl, The Pile, MassiveText, blogs, Wikipedia, GitHub, etc. These datasets are generally…

Generative AI: LLMs: Getting Started 1.0

With the hype around ChatGPT/LLMs, I thought about writing a blog post series on LLMs. This series will focus more on fine-tuning LLMs and how we can leverage them to perform NLP tasks. We will start by discussing the different types of LLMs, then slowly move to fine-tuning LLMs for specific downstream…